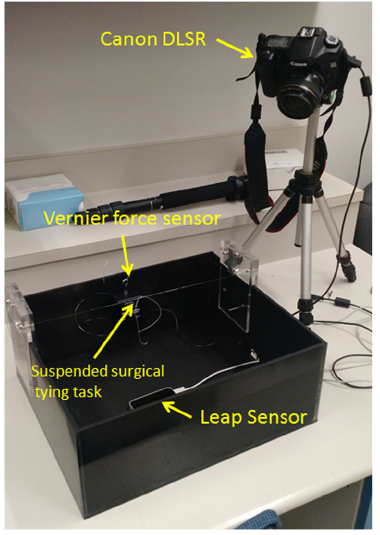

In this study, we use data captured by smart sensors to deploy a Cloud Computing Interface (CCI) in the cloud infrastructure, so that fine motion data can be processed, analyzed, evaluated, and stored there. We introduce a smart sensor-based motion detection technique for the objective measurement and assessment of surgical dexterity among users at different experience levels. The goal is to allow trainees to evaluate their performance against a reference model shared through the CCI, without the physical presence of an evaluating surgeon.
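This section does not say how a trainee's motion is compared with the shared reference model. As one illustration, assume each recording is a sequence of 3-D fingertip positions. The sketch below then scores a trainee against the reference with dynamic time warping (DTW). DTW is a standard technique that we substitute here, not necessarily the authors' method. It aligns two trajectories that were performed at different speeds before it measures how far apart they are. The function name `dtw_distance` and the trajectory format are hypothetical.

```python
import math
from typing import Sequence, Tuple

# Assumed data layout: a trajectory is a list of (x, y, z) fingertip positions.
Point = Tuple[float, float, float]


def dtw_distance(trainee: Sequence[Point], reference: Sequence[Point]) -> float:
    """Hypothetical helper: DTW distance between two 3-D trajectories.

    Lower values mean the trainee's motion follows the reference more closely,
    regardless of differences in execution speed.
    """
    n, m = len(trainee), len(reference)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning trainee[:i] with reference[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(trainee[i - 1], reference[j - 1])  # Euclidean distance
            # Extend the cheapest of the three allowed alignment steps.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


if __name__ == "__main__":
    reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    slower_trainee = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    print(dtw_distance(slower_trainee, reference))
```

Because DTW absorbs timing differences, a trainee who follows the same path more slowly scores the same as one who matches the reference tempo. A real assessment would probably also need separate measures of speed and smoothness.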

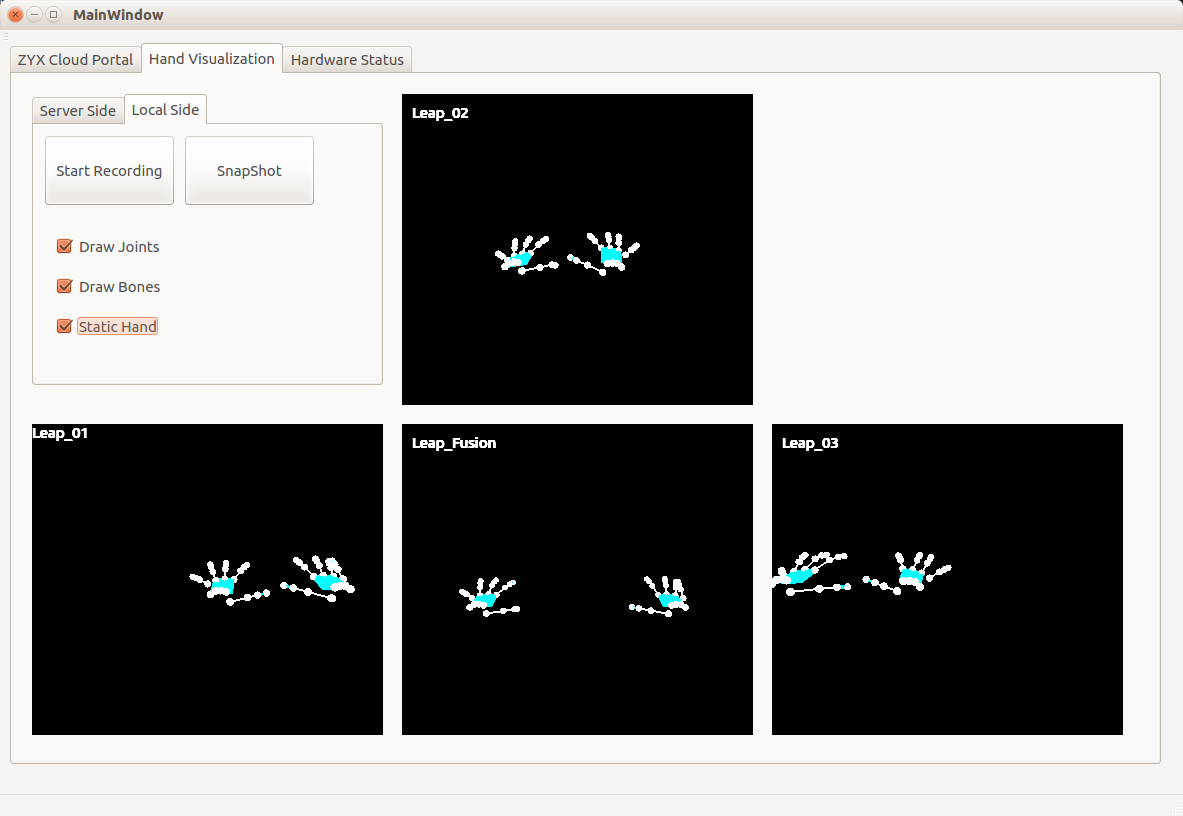

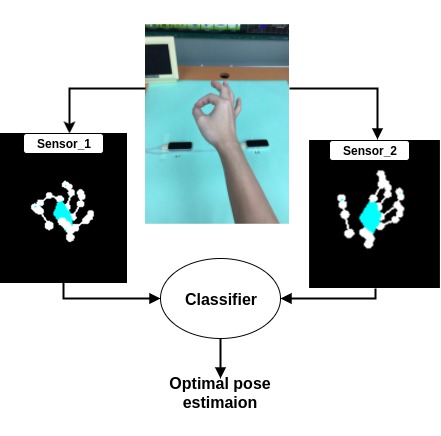

However, accurately tracking the articulation of hand poses in real time is still an open research problem. Each sensor has a limited viewing volume, and complex, fast finger movements make its data noisy. In this work, we therefore also focus on improving hand pose estimation and enhancing existing single-view tracking systems. We propose using multiple sensors and fusing their estimates to predict hand pose motion more accurately.